Last month we held an event called Sounds Amazing at the 主播大秀 Radio Theatre in London. It brought together experts in audio production and technology from across the 主播大秀 and the wider industry. This post discusses the event and the research behind it, showcasing some of our recent activity.

Sounds Amazing was a great event, celebrating innovation in audio in both its creative and technical aspects. It was hosted by the brilliant 主播大秀 Click presenter and music technologist L J Rich. We heard from leading practitioners, including the BAFTA award-winning sound team behind Blue Planet II, and got insights from commissioners about future directions. The 主播大秀 Academy's , which discusses these sessions in more detail, as well as the fascinating panel on voice-controlled devices and the inspiring introduction by our Chief Technology & Product Officer, Matthew Postgate.

For me, one of the highlights was , who is the head of the . I've long been a fan. He gave a thought-provoking talk on how technology could radically change our creative use of sound, if only we could learn to listen critically to the world around us.

Spatial Audio Production

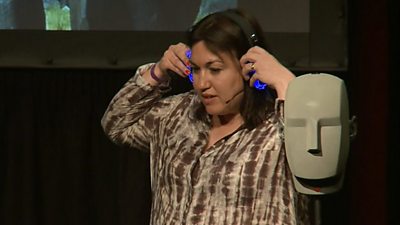

主播大秀 R&D's research sound engineers Cathy Robinson and Tom Parnell were there too. They gave insightful talks explaining spatial audio and how it is created, including live demos with a dummy head microphone and a silent-disco-style headphone system. The Academy has posted with Cathy and Tom after the event, along with some of the other great speakers.

We are steadily making more spatial audio content at the 主播大秀, particularly binaural for headphone listeners. Last year we began creating live streams of the 主播大秀 Proms in binaural sound, which we're planning to do again this year. You can read about our production process for these concerts in this recent and listen to the results . We are also working with the 主播大秀 Academy to create online guides and face-to-face courses to train our sound engineers and producers in this area.

Technology Expo

We also held a technology expo alongside the talks in the Radio Theatre. It showcased exciting developments coming out of the 主播大秀 Audio Research Partnership, as well as the latest consumer audio technology from the team. The projects involved are worth listing, as they cover a remarkable range of audio research:

- The tech expo was organised by the project (more on this below), showcasing the latest developments in immersive and personalised object-based audio systems.

- The project showed how sound design techniques can be used to rethink accessibility to film and television for visually impaired audiences.

- The University of York's Audio Lab also showcased their work on immersive audio technology for VR/AR; we previously collaborated on the project in this area.

- The project showed how sound effect library search can be enhanced by automatically adding timbral tags. You can hear how 'explosion' effects can be automatically ordered according to how 'deep' and how 'hard' they sound.

- The large-scale project (Fusing Audio and Semantic Technologies) presented tools for and .

- Speech interfaces are fundamentally changing how we interact with technology, but computer voices still sound artificial. The project, a collaboration between the University of Edinburgh and 主播大秀 News Labs, is using the latest research to make individualised & natural computer speech, particularly for less popular languages.

- The collaborative EU project, which involved 主播大秀 R&D, demonstrated object-based audio experiences using the innovative Orpheus app and The Mermaid's Tears interactive audio drama.
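The timbral-tagging demo ranks 'explosion' effects by how 'deep' they sound. The sketch below is only a rough illustration of automatic ordering by a timbral attribute, using zero-crossing rate (ZCR) as a crude stand-in for 'depth'. It is not the project's timbral model, which is considerably more sophisticated. The assumption is that a lower ZCR indicates more low-frequency energy and therefore a 'deeper' sound. The effect names and the synthetic test tones are hypothetical.

```python
import math
from typing import Dict, List


def zero_crossing_rate(samples: List[float]) -> float:
    """Fraction of adjacent sample pairs whose sign differs (crude brightness proxy)."""
    if len(samples) < 2:
        return 0.0
    crossings = sum(
        1 for a, b in zip(samples, samples[1:]) if (a >= 0.0) != (b >= 0.0)
    )
    return crossings / (len(samples) - 1)


def order_by_depth(effects: Dict[str, List[float]]) -> List[str]:
    """Return effect names from 'deepest' (lowest ZCR) to least deep.

    Assumption: lower ZCR ~ more low-frequency energy ~ 'deeper' timbre.
    """
    return sorted(effects, key=lambda name: zero_crossing_rate(effects[name]))


def tone(freq_hz: float, sample_rate: int = 8000, seconds: float = 0.1) -> List[float]:
    """Hypothetical stand-in for a sound effect: a pure sine tone."""
    n = int(sample_rate * seconds)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


# Hypothetical 'explosion' library represented by tones of different pitch.
library = {
    "explosion_rumble": tone(60),
    "explosion_crack": tone(2000),
    "explosion_mid": tone(400),
}
print(order_by_depth(library))  # ['explosion_rumble', 'explosion_mid', 'explosion_crack']
```

A production system would use perceptually validated timbral descriptors rather than a single signal statistic. The sorting step stays the same once each effect has a score.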

We also showcased the wildlife film , which was produced with a stunning 3D spatial audio soundtrack, alongside some great listening demos by S3A.

S3A Spatial Audio Project

Sounds Amazing was a co-production between the 主播大秀 Academy, 主播大秀 R&D and the project.

S3A is a major five-year project funded by the Engineering and Physical Sciences Research Council (EPSRC). The goal of the project is to deliver a step-change in the quality of audio consumed by the general public. It uses novel audio-visual signal processing to enable immersive audio to work outside the research laboratory, in everyone's homes. It is a collaboration between 主播大秀 R&D and the Universities of , and .

The project has produced a large body of research over the last four years. Some recent highlights include:

- A on how sound engineers work to achieve envelopment in spatial audio mixing. A sense of envelopment has been shown to be a key benefit of spatial audio. This work helps us to understand how producers can achieve it and how it could be enhanced.

- on automatically selecting the speech-to-background ratio to maintain intelligibility. A key benefit of object-based audio is the ability to adjust dialogue level to suit the end listener. These techniques help us to model speech intelligibility and automatically adapt to improve it.

- that captured how sound engineers would adapt the mix of object-based audio when rendered to different loudspeaker systems. An advantage of object-based audio systems is that they can be adapted automatically to the sound system available in your home. This work is helping us to improve this process.

- , with many authors from across the project, presenting the overall vision of S3A: an end-to-end audio-visual system for object-based audio. It highlights the principles and architecture of the system that the project has built to drive its applied research, as well as some novel applications of the system in content production.
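Object-based audio keeps dialogue and background as separate objects, so their balance can be changed at the listener's end. The sketch below is a purely level-based illustration, not the S3A method, which models intelligibility itself rather than just signal level. It measures the current speech-to-background ratio (SBR) from RMS levels. It then computes the linear gain to apply to the background object so the mix reaches a target SBR. The function names, the 12 dB target, and the choice never to boost the background are assumptions made for this example.

```python
import math
from typing import List


def rms_db(samples: List[float]) -> float:
    """RMS level of a signal in dB relative to a full-scale value of 1.0."""
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        raise ValueError("signal is silent; its level is undefined")
    return 10.0 * math.log10(mean_square)


def sbr_db(speech: List[float], background: List[float]) -> float:
    """Speech-to-background ratio in dB."""
    return rms_db(speech) - rms_db(background)


def background_gain(
    speech: List[float],
    background: List[float],
    target_sbr_db: float,
    allow_boost: bool = False,
) -> float:
    """Linear gain for the background object so the mix reaches target_sbr_db.

    Hypothetical policy: by default the background is only ever attenuated,
    so a mix that already exceeds the target is left unchanged.
    """
    gain_db = sbr_db(speech, background) - target_sbr_db
    if not allow_boost:
        gain_db = min(gain_db, 0.0)
    return 10.0 ** (gain_db / 20.0)


# Hypothetical objects: a 200 Hz 'speech' tone and a quieter 50 Hz 'background' tone.
sample_rate = 8000
speech = [math.sin(2 * math.pi * 200 * i / sample_rate) for i in range(sample_rate)]
background = [0.5 * math.sin(2 * math.pi * 50 * i / sample_rate) for i in range(sample_rate)]

g = background_gain(speech, background, target_sbr_db=12.0)
remixed = [g * b for b in background]
print(round(sbr_db(speech, remixed), 6))  # 12.0
```

Attenuating the background rather than raising the dialogue avoids pushing the speech object towards clipping. A real renderer would also smooth the gain over time rather than applying one static value.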

The whole range of great academic output from the project can be found here.

S3A was also involved in the production of The Turning Forest and continues to apply its cutting-edge research to content production, allowing us to demonstrate immersive spatial audio to 主播大秀 audiences. We're currently working on a new concept that we're calling "media device orchestration" (MDO), which links to our work on synchronising devices. The aim is to deliver some of the benefits of spatial audio in a low-cost, accessible way, using existing sound-emitting devices that many people already have in their homes. from the University of Salford gave a talk at Sounds Amazing that explains the idea, the research we've been doing, and the content production that we're currently working on. (Headphones at the ready, there's an on-stage binaural demo!)

We are really looking forward to sharing our first MDO production, The Vostok K Incident, with you in the near future.

Update: We have a new in the June issue of the Journal of the Audio Engineering Society on Media Device Orchestration.

Now & Next

Back in 2015 we held a similar event to Sounds Amazing called Sound Now & Next. We've previously shared a report and videos from that event. We hope to hold another event like this in a couple of years' time to keep the production community in touch with the latest audio innovations. In the meantime, keep your eyes on this blog: we'll share more news about our activity soon.

-

Immersive and Interactive Content section

The IIC section is a group of around 25 researchers investigating ways of capturing and creating new kinds of audio-visual content, with a particular focus on immersion and interactivity.