Maintaining Mobile: Update on Mobile Compatibility Programme

Steven Cross

Senior Test Engineer

With the proliferation of mobile devices and platforms now available, making sure BBC apps always work as intended can be a challenging job. Steven Cross is the Test Lead for the Mobile Compatibility Programme, and explains how the team looks to ensure our native mobile applications work across different devices.

Overview

The Mobile Compatibility Programme (MCP) has been running for the past couple of years and was originally formed to serve as an early warning system for software development teams - a.k.a 'product teams' - to give them a heads up when a particular issue affecting one of our popular native mobile applications had been identified on a device under test. The product teams could look to investigate and prioritise these issues for fixing where possible. I say ‘where possible’ since some issues are outside of our immediate control, owing to dependencies on manufacturers and third-party suppliers.

Scope

In an earlier blog post written by my colleague Paul Rutter, you'll see it spoke of an initial focus on Android devices. However, as MCP has continued to evolve, our testing now routinely includes iOS devices as well, since both of these platforms have a huge audience reach and we’ve worked hard to ensure feature parity across different apps.

The MCP covers a number of different work streams, largely due to the way we have grown and evolved as a team, looking to bring about as much value as possible. By way of a summary, these different work streams are listed below:

- Testing devices sent to us directly from the manufacturer. These can include pre-production devices which have not yet hit the market and which, more often than not, come with confidentiality agreements that we are asked to respect.

- Testing devices that we decide to purchase either because they are proving popular with users and/or we have had reports of specific problems affecting a given device.

- Testing new versions of operating systems released by Apple (iOS), Google (Android), and Amazon (Fire OS). We will also test different operating systems – e.g. Windows Mobile – when necessary.

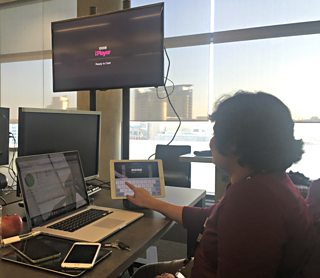

We find ourselves in a unique position of covering an expanding array of native mobile applications and invariably have a bird’s-eye view of our most popular and widely used products such as BBC iPlayer, BBC iPlayer Radio, BBC Sport, BBC Weather, BBC News, BBC Media Player, and apps developed by BBC Children’s. It is essential our test effort verifies core functionality, ensuring the most common user journeys are behaving as expected.

However, as experienced testers, we continue to be on the lookout for any other issues affecting such things as app stability, usability, and performance. When prioritising which test cases need to be executed, we champion the PUMA model. A brief description of this acronym is below; there is also a dedicated blog post on the subject.

P - tests must prove core functionality and so be considered high value (if any fail, these would be fixed asap).

U - tests must be understandable by everyone (concise, easy to interpret, outline pre-requisites and expected behaviour).

M - tests are mandatory; they must be run and must pass.

A - this covers automation (as such test cases would be a perfect candidate for automation assuming they can be easily automated).*

*MCP currently run tests manually.
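As a rough illustration of how the PUMA criteria could drive test selection, the sketch below filters a suite down to the cases that satisfy P, U and M, and separately flags which of those would be automation candidates. The `SuiteCase` fields, the function names and the example titles are all hypothetical; they are not taken from our test management tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuiteCase:
    # All fields are illustrative assumptions, not a real tool's schema.
    title: str
    proves_core_functionality: bool  # P
    understandable: bool             # U
    mandatory: bool                  # M
    automatable: bool                # A


def is_puma(case: SuiteCase) -> bool:
    """A case qualifies when it satisfies P, U and M; A is tracked separately."""
    return (
        case.proves_core_functionality
        and case.understandable
        and case.mandatory
    )


def select_puma(suite: list[SuiteCase]) -> list[SuiteCase]:
    """Keep only the qualifying cases, preserving the suite's order."""
    return [case for case in suite if is_puma(case)]


def automation_candidates(suite: list[SuiteCase]) -> list[SuiteCase]:
    """Qualifying cases that could be automated at a later date."""
    return [case for case in select_puma(suite) if case.automatable]


# Made-up example suite.
suite = [
    SuiteCase("Play an episode", True, True, True, True),
    SuiteCase("Change text size in settings", False, True, False, True),
    SuiteCase("Download an episode for offline playback", True, True, True, False),
]

puma_titles = [case.title for case in select_puma(suite)]
candidate_titles = [case.title for case in automation_candidates(suite)]
print(puma_titles)
print(candidate_titles)
```

Keeping the automation flag out of `is_puma` reflects the footnote above: a test can be a PUMA test today even though it is still executed by hand.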

Challenges

There are a number of challenges that we face, but they do make life interesting! Some examples are below.

Devices - The mobile world moves fast. There is a constant stream of new devices hitting the market across different platforms. So how do we prioritise which devices and which operating systems to test? We factor many different things into our decision-making process. We use mobile device usage analytics across our BBC products, as well as being aware of pan-UK trends, to identify the devices that are proving popular with the audience and ensure these are compatibility tested accordingly. We are pragmatic, in the sense that priorities can change and something currently in-flight can be put on hold in favour of something else, e.g. a major new Operating System (O/S) version has been released for a more popular device or group of devices, or an exciting new pre-production device has arrived for us to look at which could be the 'next big thing'.
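To make the analytics-driven part of this concrete, here is a minimal sketch, assuming usage data is available as a simple count of sessions per device model. It ranks the devices we have not yet tested by their share of overall usage. The device names, session counts and the function name `rank_untested_devices` are made up for illustration, and a plain ranking like this does not capture the other factors mentioned above, such as pan-UK trends or a newly arrived pre-production device.

```python
def rank_untested_devices(
    sessions_by_device: dict[str, int],
    tested: set[str],
) -> list[tuple[str, float]]:
    """Return untested devices with their usage share, most used first."""
    total = sum(sessions_by_device.values())
    if total == 0:
        return []  # No usage data, so nothing to rank.
    shares = [
        (device, count / total)
        for device, count in sessions_by_device.items()
        if device not in tested
    ]
    return sorted(shares, key=lambda item: item[1], reverse=True)


# Hypothetical analytics snapshot: sessions per device model.
sessions = {"Phone A": 500, "Phone B": 300, "Tablet C": 150, "Phone D": 50}
already_tested = {"Phone A"}

ranking = rank_untested_devices(sessions, already_tested)
for device, share in ranking:
    print(f"{device}: {share:.0%} of sessions")
```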

Operating Systems - This is somewhat inevitable, but we constantly monitor and test upcoming versions of O/S released by Apple, Google and Amazon, since we want to ensure our products continue to offer the audience the best experience possible. Some releases are more significant than others, and we pay particular attention to major releases, e.g. those introducing Android ‘Marshmallow’ or iOS 9.

We need to be wary of beta versions not being the same as what is made publicly available and look to invest more time testing the penultimate version (sometimes known as a gold master or final preview). The Android market is described as ‘fragmented’ due to the diverse array of devices which support it and how some manufacturers restrict the flow of O/S updates, so we need to be aware of what O/S version our most popular devices are running and whether an update is available or not. In contrast, the adoption rate for the latest version of iOS is usually much faster (however, as the number of different devices running iOS increases, updates tend to support those models which are most recent).

App Releases - Again, somewhat inevitable, but still a challenge for us on MCP. We test multiple native mobile applications, each of which is developed by their respective product team. These teams continue to implement new features, remove existing features, implement new versions or different types of back-end components (e.g. an Audio/Video player for playback, Digital Rights Management agent for Downloads capability, etc), incorporate a different User Interface (e.g. changing navigation, etc), refactor code (for a more efficient codebase), and generally continue to tweak and perfect the end user experience. This means we have to be across what has changed to understand expected behaviour.

Maintaining test suites (which house test cases) is vital, so the tests we execute are still valid and still verify core functionality. We regularly engage with the different product teams, inviting them to ‘demo’ what they have been working on so we get to see first hand how the app is expected to behave as well as affording us the opportunity to discuss our test results, ask questions, find out what is in the pipeline, etc. We look to keep in regular contact with each of the product teams we test on behalf of and afford them the opportunity to specify which app version we should test against.

Reporting

After completing a test plan using our test management tool, we look at collating our test results, together with any pertinent data (versions tested, screenshots, videos, crash logs, etc) ready for product teams and stakeholders alike to review. To accompany this, I usually circulate an HTML based test report, surfacing some of our key findings within a highlights section (with links to more detailed results supplied). There is also a Red, Amber, or Green (RAG) status to give an indicative view of the severity of issues found per product and the report usually gives an indication of upcoming devices.
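As an illustration of how a per-product RAG status might be derived, the sketch below maps the severities of the issues found for each product to a single colour. The rule used here (any high severity issue gives Red, any medium gives Amber, otherwise Green) is an assumption for the example rather than our documented policy, and the product names and severity labels are placeholders.

```python
from enum import Enum


class Rag(Enum):
    RED = "Red"
    AMBER = "Amber"
    GREEN = "Green"


def rag_status(severities: list[str]) -> Rag:
    """Assumed rule: the worst severity found decides the colour."""
    if "high" in severities:
        return Rag.RED
    if "medium" in severities:
        return Rag.AMBER
    return Rag.GREEN


# Placeholder results: severities of issues found per product.
issues_by_product = {
    "Product A": ["low", "high"],
    "Product B": ["medium", "low"],
    "Product C": [],
}

report = {
    product: rag_status(severities).value
    for product, severities in issues_by_product.items()
}
print(report)
```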

In the interests of identifying and fixing bugs as early as possible, we look to flag high severity issues as and when these are uncovered when testing. Moreover, we make our interim results visible to product teams throughout our test execution. Our findings are well received and the respective product teams concerned will choose which aspects of our test results to investigate further and prioritise fixes accordingly, ready for the next version to hit an app store near you!

Benefits

Our test effort offers a number of advantages, which are summarised below.

Test Coverage. One device we either receive or decide to purchase can be used to benefit several different product teams. This gives them the freedom to concentrate on developing new features, etc.

Audience Reach. In turn, our work serves to cover as many of our users as possible on their favourite devices, ensuring they have the best mobile experience possible using our popular apps across platforms. We actively monitor this by tracking our cumulative audience reach throughout the year.
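One way to picture cumulative audience reach is as a running total of the usage share of every device tested so far. The sketch below is a simplified model under that assumption; the usage shares, device names, months and the function name `cumulative_reach` are invented for the example and are not real figures.

```python
def cumulative_reach(
    usage_share: dict[str, float],
    tested_by_month: list[tuple[str, list[str]]],
) -> list[tuple[str, float]]:
    """Running share of usage covered by all devices tested to date."""
    covered: set[str] = set()
    timeline = []
    for month, devices in tested_by_month:
        covered.update(devices)  # Re-testing a device does not count twice.
        reach = sum(usage_share.get(device, 0.0) for device in covered)
        timeline.append((month, round(reach, 4)))
    return timeline


# Invented figures: share of overall usage per device model.
usage_share = {"Phone A": 0.25, "Phone B": 0.125, "Tablet C": 0.0625}
tested_by_month = [
    ("Jan", ["Phone A"]),
    ("Feb", ["Phone B", "Phone A"]),
    ("Mar", ["Tablet C"]),
]

timeline = cumulative_reach(usage_share, tested_by_month)
for month, reach in timeline:
    print(f"{month}: {reach:.2%}")
```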

Cost. Purchasing a single device for MCP purposes negates the need for individual product teams to source their own, thus controlling budgetary spend.

Autonomy. We are unique, in the sense that we do not follow a traditional software development process. Comprised mainly of testers, the MCP team is familiar with a host of different apps which are tested on a daily basis. This brings a level of objectivity from staff who are empowered to recommend functional and non-functional improvements on behalf of our audiences.

I hope this has given you a useful insight into how we undertake our mobile compatibility testing.