I recently became a manager for Media Services, the team that transcodes video and audio for online playout across all 主播大秀 websites and mobile apps.

There was a time at the 主播大秀 when we thought turning acceptance criteria into automated checks was a great thing to do. Such a great thing that we started applying it to everything we did: we came up with acceptance criteria in the "Given/When/Then" format .. which we then did our best to automate using a range of tools.

The thing is .. the first time you do this, it looks great. The automated check passes and everyone feels good about it. The team get a heightened sense of confidence. So you put it on a continuous integration server and run it several times a day. And then you go "Well that was good! Let's do more!"

And then you do more .. and more .. and more.

Some things are difficult to automate, so you come up with complicated workarounds that nobody really understands. And the nature of acceptance criteria is that they are generally very high-level, which means they need a lot of other setup and dependencies to be working. And they take a long time to run. Then one day some dependency fails, so the automated check reports a failure .. but it's a one-off and it works next time, so you ignore it. And then another day a small change means a lot of your automation fails, and it becomes a pain to try and fix it all.

Time goes by, people leave the team and new people come on board, but by now the automation is too complicated to set up and get working, and the things it depends on have changed and are no longer compatible. The value that the automation provided in the beginning has long since been killed by diminishing trust.

Any of this sounding familiar? So what do you do?

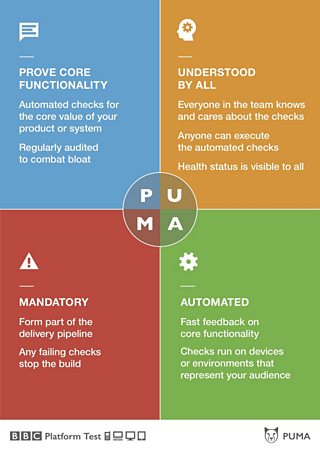

The test managers in 主播大秀 Platform Test got together to try and come up with solutions to these problems. We recognise that there is value in testing with the help of automation, but that it can be taken to unhelpful extremes. We needed to focus. We needed a PUMA.

PUMA stands for:

Prove core functionality

We want to focus only on the core of the product under test. Other automated checks may very well be performed elsewhere, but not as part of PUMA. This is to try to avoid the bloating that other test frameworks have suffered. PUMA checks are regularly reviewed to ensure they are still relevant, and if not, they are deleted.

Understood by all

It is important that the whole team contributes: first by deciding what the PUMA checks should be, and then by being able to run them and interpret the results. The current status of the PUMA checks should be clearly visible. This is not just something for the testers to do .. it should provide clear and meaningful value to the entire team.

Mandatory

The PUMA checks are the ones that must always pass, because they cover the product's core value. Any failure is significant: it must stop the build. The team should work together to fix the problem quickly and get the PUMA checks passing again.
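To make "it must stop the build" a little more concrete, here is a minimal sketch of a PUMA gate in Python. It assumes each PUMA check is a plain zero-argument function that raises an exception (typically `AssertionError`) when it fails; the names `puma_check`, `run_puma_checks`, `PUMA_CHECKS` and the example check are hypothetical and not part of any 主播大秀 tooling. CI servers generally treat a non-zero exit code as a failed step, so `main()` returns 1 whenever any check fails.

```python
from typing import Callable, Dict, List

# Hypothetical registry of PUMA checks: check name -> zero-argument function.
PUMA_CHECKS: Dict[str, Callable[[], None]] = {}


def puma_check(func: Callable[[], None]) -> Callable[[], None]:
    """Register a function as a PUMA check (hypothetical helper)."""
    PUMA_CHECKS[func.__name__] = func
    return func


def run_puma_checks(checks: Dict[str, Callable[[], None]]) -> List[str]:
    """Run every check, print a visible PASS/FAIL line, return failed names."""
    failures: List[str] = []
    for name, check in checks.items():
        try:
            check()
            print(f"PASS {name}")
        except Exception as exc:  # any exception counts as a failure
            print(f"FAIL {name}: {exc!r}")
            failures.append(name)
    return failures


@puma_check
def transcoded_output_is_playable() -> None:
    # Placeholder only: a real check would transcode a sample and inspect it.
    assert True


def main() -> int:
    failures = run_puma_checks(PUMA_CHECKS)
    # Mandatory: any failure yields a non-zero exit code, failing the build.
    return 1 if failures else 0
```

A CI job could call `sys.exit(main())` from its entry point. Printing one line per check is a deliberately simple way of keeping the status readable by the whole team, not just the testers.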

Automated

We know that automation can give fast feedback. If done right, automated checks can run regularly, consistently and reliably. If something can't be automated, then it's not a PUMA check .. or, if it's core functionality, we should work out a way to check it automatically.

Besides the PUMA acronym, it is cool to note that pumas are fast! They can run at up to 50 miles per hour (80 km/h). It is important that your PUMA checks run quickly to provide fast feedback and not hold up your delivery pipeline.
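One possible way to keep PUMA checks fast is to give each check an explicit time budget and flag any that overrun. This is our own assumption rather than something PUMA prescribes: the sketch below times each check with `time.perf_counter` and reports the ones that exceeded a hypothetical `budget_seconds`. The function name `time_checks` and the example checks are illustrative only.

```python
import time
from typing import Callable, Dict, List, Tuple


def time_checks(
    checks: Dict[str, Callable[[], None]], budget_seconds: float
) -> Tuple[Dict[str, float], List[str]]:
    """Run each check, record its duration, and list those over budget."""
    durations: Dict[str, float] = {}
    slow: List[str] = []
    for name, check in checks.items():
        start = time.perf_counter()
        check()  # failures propagate; this sketch only measures speed
        elapsed = time.perf_counter() - start
        durations[name] = elapsed
        if elapsed > budget_seconds:
            slow.append(name)
    return durations, slow


# Usage with two illustrative checks and a 0.1 second budget per check.
def quick() -> None:
    pass


def sluggish() -> None:
    time.sleep(0.2)


durations, slow = time_checks({"quick": quick, "sluggish": sluggish}, budget_seconds=0.1)
print(slow)  # ['sluggish']
```

Treating a slow check the same way as a failing one is a design choice: it keeps the pressure on the team to keep the PUMA suite small and quick rather than letting it drift back towards long-running, high-level automation.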

My team will follow up this blog post with some case studies, and some thoughts about how you might begin to unleash the PUMA in your own organisation.

Have you experienced any of the problems mentioned above? How would you go about tackling them? What do you think of the PUMA approach?