Recently launched on the 主播大秀 Sport London 2012 website is an application developed by 主播大秀 R&D that puts you in the heart of the action. In this blog post I hope to give an insight into the development of the application and explain what's going on under the bonnet.

A slightly rotund member of the R&D team manages a world record thrashing sprint... but how?

Past Work

Just before Christmas last year I was presenting some of our sports analysis work to the 主播大秀 Future Media senior leadership team. During discussions that followed our demos, one of the leadership team talked about how he'd really like to see himself in the 100 metres. This got us thinking.

I had been demonstrating our work investigating how biomechanics could be used to enhance television sports analysis - how its tools and techniques could improve the viewer's understanding and engagement.

In a separate project, Bruce Weir - based at our North Lab - had been working on an experimental tool called VenueVu to help visualise multiple live events occurring over an extended area.

This had been used at Wimbledon in 2011 during the live TV broadcast. When cutting between courts, VenueVu offered the ability to fly between those two courts via a computer generated model of the All England Tennis Club, blending seamlessly with the real video at each end of the flight.

While this version was a broadcast tool, an earlier version of the same tool had been designed to be used by the viewer at home and delivered to a browser. This version used Flash to place "real" videos within the "virtual" model of Wimbledon and allowed the viewer to choose when they moved from one match to another.

It occurred to us that perhaps we could combine these two projects. We could use the tools developed to combine the real and virtual worlds within a viewer's web browser to put the viewer inside the 100m. This would not only be fun, but viewers might also gain an insight into the event and into the athletic achievement and performance that the science of biomechanics seeks to explain.

Placing virtual objects into scenes has been the stock in trade of the Production Magic section at 主播大秀 R&D, from election night graphics through to the Piero sports graphics system. However, this would be the first time that the viewer at home would be able to interact with the virtual objects.

In order to mix the virtual and the real, it is necessary to know exactly where the camera is in the real world and how it is orientated. In football and tennis this process is helped by the regular pitch and court markings from which the camera view can be extrapolated. Athletics, however, features a more difficult environment where there are fewer suitable lines to track. Thankfully we have been working in this area over the last few years and have developed an image-based tracking technique that allows the camera to be tracked in such scenes. Putting all these different projects together we started to develop a prototype.

Bringing the Projects Together

Tracking

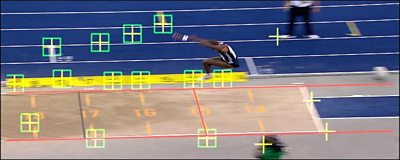

The system relies on knowing where the camera is positioned and pointed throughout the video sequences. This "calibration" must be prepared as an offline process in advance. To do this we first mark out the positions of a few key features in the image with their known equivalents in the real world; the system then calculates a camera pose and position from where these correspondences would make sense. We can then track the camera movement from frame to frame to work out the pose over the rest of the sequence.

The above image shows the tracking and calibration process in action on a triple jump. The red lines have been drawn on areas of the image where the real world position is known. The yellow crosses mark areas of texture that can be tracked from frame to frame to determine camera movement.
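To give a feel for the frame-to-frame step, here is a minimal Python sketch. It is not the tracker described above: it assumes the camera only pans and tilts between frames, that the tracked patches sit reasonably close to the image centre, and that the focal length is known in pixels. The function name and parameters are hypothetical. Taking the median of the patch displacements means a few patches that land on moving athletes do not drag the estimate off.

```python
import math
from statistics import median

def estimate_pan_tilt_change(prev_points, next_points, focal_px):
    """Estimate the change in camera pan and tilt (radians) between two frames.

    prev_points / next_points: matching lists of (x, y) pixel positions of the
    same tracked texture patches, measured from the image centre with y up.
    focal_px: focal length expressed in pixels (an assumed, known value).
    """
    if len(prev_points) != len(next_points) or not prev_points:
        raise ValueError("need the same non-zero number of points in each frame")

    # Median displacement is robust to a minority of badly tracked patches.
    dx = median(n[0] - p[0] for p, n in zip(prev_points, next_points))
    dy = median(n[1] - p[1] for p, n in zip(prev_points, next_points))

    # Panning right moves the scene left in the image, hence the sign flip.
    d_pan = math.atan2(-dx, focal_px)
    d_tilt = math.atan2(-dy, focal_px)
    return d_pan, d_tilt

# Four background patches shift 10 pixels left; the fifth is an outlier.
prev_pts = [(0, 0), (50, 20), (-30, 10), (10, -40), (5, 5)]
next_pts = [(-10, 0), (40, 20), (-40, 10), (0, -40), (60, 5)]
d_pan, d_tilt = estimate_pan_tilt_change(prev_pts, next_pts, focal_px=1000.0)
```

A real tracker would refine the full pose, including zoom, against all the tracked features rather than relying on a single median shift.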

Rendering

The web application was built using Flash and the open-source 3D graphics library Away3D. The video sequences the application plays were converted into FLV format. The camera pose calibration data was embedded into the resulting file as frame-by-frame 'ScriptData' tags, time-stamped for 'presentation' with the same timestamp as the video frame to which they correspond. This camera pose data is made available to the Flash application during video playback via a handler method which is triggered whenever a ScriptData tag is encountered. The handler extracts the embedded camera pose data and uses it to control the pose of an Away3D Camera3D object, modified to generate the correct transform for converting the 3D model coordinates into screen coordinates when provided with a camera pose, field of view and aspect ratio. This method also initiates the render pass, which ensures that the video updates and 3D overlay updates happen simultaneously. The 3D model elements are positioned in the scene graph such that they are rendered after the video image; this means that they will always appear in the foreground.
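The transform from 3D model coordinates to screen coordinates can be sketched in a few lines. The example below is Python rather than ActionScript and does not use the Away3D API. It assumes a left-handed, y-up coordinate system, a pose given as a position plus pan and tilt angles (roll is ignored), and a vertical field of view; all function and parameter names are hypothetical.

```python
import math

def camera_axes(pan, tilt):
    """Return the camera's right, up and forward unit vectors (angles in radians)."""
    sp, cp = math.sin(pan), math.cos(pan)
    st, ct = math.sin(tilt), math.cos(tilt)
    right = (cp, 0.0, -sp)
    up = (-st * sp, ct, -st * cp)
    forward = (sp * ct, st, cp * ct)
    return right, up, forward

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def project_to_screen(point, cam_pos, pan, tilt, fov_y, width, height):
    """Project a world-space point to pixel coordinates (origin top-left).

    Returns None when the point lies behind the camera.
    """
    right, up, forward = camera_axes(pan, tilt)
    rel = tuple(p - c for p, c in zip(point, cam_pos))
    xc, yc, zc = dot(right, rel), dot(up, rel), dot(forward, rel)
    if zc <= 0.0:
        return None

    focal = 1.0 / math.tan(fov_y / 2.0)  # focal length in normalised units
    aspect = width / height
    ndc_x = focal * xc / (aspect * zc)   # -1 at the left edge, +1 at the right
    ndc_y = focal * yc / zc              # -1 at the bottom edge, +1 at the top
    return ((ndc_x + 1.0) * 0.5 * width, (1.0 - ndc_y) * 0.5 * height)

# A point straight ahead of an unrotated camera lands in the middle of the frame.
centre = project_to_screen((0.0, 0.0, 10.0), (0.0, 0.0, 0.0),
                           0.0, 0.0, math.radians(90.0), 1280, 720)
```

In an application like the one described, a function of this kind would be driven by the pose delivered with each video frame, so the overlay and the picture move together.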

The 3D model elements were designed using modo and exported as FBX files. Prefab3D was then used to convert the models into raw ActionScript which could be used directly by the application.

The texture mapping on the user's avatar uses two maps. The head's texture is applied separately with the face designed to be mappable with a simple planar projection from the front in order to allow a photo to be used as a texture without needing any special processing. The default face is based on a composite 'UK citizen' to get the approximate positions right.
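A planar projection from the front simply ignores depth and maps each vertex's horizontal and vertical position onto the photo. The Python sketch below illustrates the idea; it assumes the face looks along the z axis, that x runs across the face and y runs up it, and that texture coordinates start at the top-left of the image. The function name is hypothetical.

```python
def planar_front_uvs(vertices):
    """Map (x, y, z) vertices to (u, v) texture coordinates by dropping z.

    u runs 0..1 from the leftmost to the rightmost vertex,
    v runs 0..1 from the topmost to the bottommost vertex.
    """
    xs = [v[0] for v in vertices]
    ys = [v[1] for v in vertices]
    min_x, max_x = min(xs), max(xs)
    min_y, max_y = min(ys), max(ys)
    width, height = max_x - min_x, max_y - min_y
    if width == 0 or height == 0:
        raise ValueError("vertices must span a non-zero area when viewed from the front")
    return [((x - min_x) / width, (max_y - y) / height) for x, y, _z in vertices]

# Chin-left, forehead-right and nose-tip vertices of a toy face mesh.
uvs = planar_front_uvs([(-1.0, -1.0, 0.2), (1.0, 1.0, 0.5), (0.0, 0.0, 1.0)])
```

Because depth plays no part, any front-facing photo can be dropped in as the texture so long as the eyes, nose and mouth sit roughly where the model expects them.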

The body model was initially painted up in athletic kit, and then lit with a simple light set-up in modo. The effects of this lighting were then 'baked' into the texture to give a colour map of how the body looks when lit. This version of the texture was used in the application to help give the impression that the body is a properly lit 3D object but without having to do any of the complicated lighting calculations in Flash.
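The idea of baking can be shown with a toy example. The Python sketch below is not what modo does internally; it assumes a single directional light, simple Lambert (diffuse) shading and a small ambient term, and multiplies the result into each texel once, ahead of time. All names and the ambient value are illustrative.

```python
import math

def bake_diffuse(albedo, normals, light_dir, ambient=0.2):
    """Pre-multiply diffuse lighting into a list of texel colours.

    albedo:    (r, g, b) colour per texel, each channel in 0..1
    normals:   unit surface normal per texel
    light_dir: vector pointing from the surface towards the light
    """
    length = math.sqrt(sum(c * c for c in light_dir))
    light = tuple(c / length for c in light_dir)
    baked = []
    for colour, normal in zip(albedo, normals):
        # Lambert term: surfaces facing away from the light get no direct light.
        lambert = max(0.0, sum(n * l for n, l in zip(normal, light)))
        shade = min(1.0, ambient + (1.0 - ambient) * lambert)
        baked.append(tuple(channel * shade for channel in colour))
    return baked

# One texel faces the light, the other faces directly away from it.
baked = bake_diffuse(
    albedo=[(1.0, 0.5, 0.0), (1.0, 0.5, 0.0)],
    normals=[(0.0, 0.0, 1.0), (0.0, 0.0, -1.0)],
    light_dir=(0.0, 0.0, 2.0),
)
```

At run time the renderer only has to display the baked colours, which is why no lighting calculations are needed during playback.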

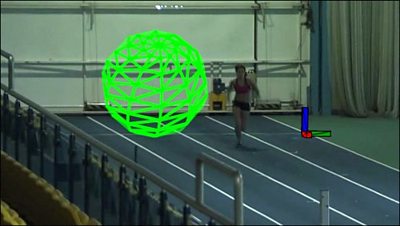

An early test of the app showing the virtual objects placed into the scene. In this version the ball rolled along next to the athlete.

Moving

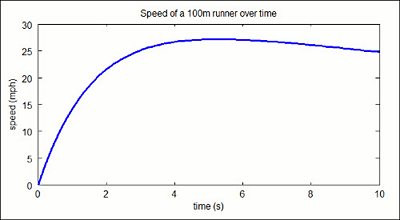

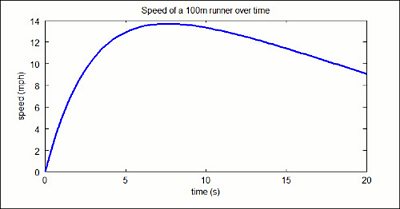

In order to simulate the involvement of the user in the events it was necessary to make them perform as realistically as possible. To take the example of the 100m race, the user would need to accelerate in a similar manner to the other athletes. To create this effect we made use of our work in the field of biomechanics, where mathematical models are often applied to simulate the performance of athletes. We created a model to represent the movement of the user's athlete down the track, choosing to base it on Robert Tibshirani's extension of the Hill-Keller model from his 1997 paper. This produces equations of motion with several variables, the values of which change the profile of the race. We get the user to enter details about their build and their time for the 100m; we can then - with some generalisations - solve for the variables and create race profiles such as those illustrated here.

The first graph would be for a world-class athlete, taking less than 10 seconds to finish the race and reaching speeds of over 25 miles per hour.

Meanwhile a profile for the rest of us might look more like this second graph, taking 20 seconds to finish, needing nearly 10 seconds to reach a top speed of less than 15 miles per hour, and starting to slow badly near the end.
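For readers who want to experiment, here is a minimal Python sketch of a race profile of this kind. It assumes the extended Hill-Keller equation of motion takes the form dv/dt = f - c*t - v/tau, where f is the initial propulsive force per unit mass, c is the rate at which that force fades and tau is a resistance time constant. The closed-form solutions, the parameter values and the function names are illustrative assumptions, not the fitting procedure used in the application.

```python
import math

def speed(t, f, tau, c):
    """Speed in m/s at time t for dv/dt = f - c*t - v/tau, starting from rest."""
    k = f * tau + c * tau ** 2
    return k - c * tau * t - k * math.exp(-t / tau)

def distance(t, f, tau, c):
    """Distance in metres covered by time t (the integral of speed)."""
    k = f * tau + c * tau ** 2
    return k * t - 0.5 * c * tau * t * t - k * tau * (1.0 - math.exp(-t / tau))

def solve_force(finish_time, tau, c, race_length=100.0):
    """Find f so that the race length is covered in exactly finish_time.

    Distance is linear in f once tau and c are fixed, so no iteration is needed.
    """
    decay = 1.0 - math.exp(-finish_time / tau)
    a = tau * finish_time - tau ** 2 * decay
    b = tau ** 2 * finish_time - 0.5 * tau * finish_time ** 2 - tau ** 3 * decay
    return (race_length - c * b) / a

# Illustrative values only: fix tau and c, then fit f to a 10 second 100m.
tau, c = 1.0, 0.05
f = solve_force(10.0, tau, c)
profile = [(step / 2, speed(step / 2, f, tau, c)) for step in range(21)]
```

Plotting `profile` gives a curve with a rapid rise to top speed followed by a gentle fade; a larger `c` or a longer finish time produces the more pronounced late slowdown of a recreational runner.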

Developing the final product

We showed our early prototype to colleagues in Future Media and received a very positive response so we continued the development for potential inclusion on the 2012 website. Paul Golds joined the team to help develop the application user interface and to build the 3D models. Over the next few months - with assistance from our colleagues in FM, particularly the UX&D team - the application turned into the one you see today. As well as being fun, we hope it helps people to understand the athletic achievement that goes into performing at the Olympics. I particularly enjoy entering my own height for the long jump and watching the athlete leap far above it. It also offers a glimpse of possible future technical developments in this area and the potential for putting the tools that were previously the domain of the television sports analysts into the hands of viewers.

We hope you enjoy it!

The finished application in action.

As well as my colleagues in R&D who built the application, Bruce Weir and Paul Golds, I'd like to thank our colleagues in Future Media and 主播大秀 Sport who have been so helpful in getting the application online. I'd particularly like to thank our collaborators at Cardiff Metropolitan University for all their help throughout the biomechanics project.

- 主播大秀 R&D - Intelligent Video Production Tools

- 主播大秀 R&D - Olympic Diving 'Splashometer'

- 主播大秀 R&D - Use of 3-D techniques for virtual production

- 主播大秀 R&D - Image-based camera tracking for Athletics

- 主播大秀 R&D - Biomechanics

- 主播大秀 R&D - Piero

- 主播大秀 R&D - The Queen's Award winning Piero sports graphics system

- 主播大秀 Internet Blog - 主播大秀 R&D wins Queen's Award for Enterprise for Piero

- 主播大秀 R&D - Real-time camera tracking using sports pitch markings
